Aerial Sports Videography with Natural Language Instructions

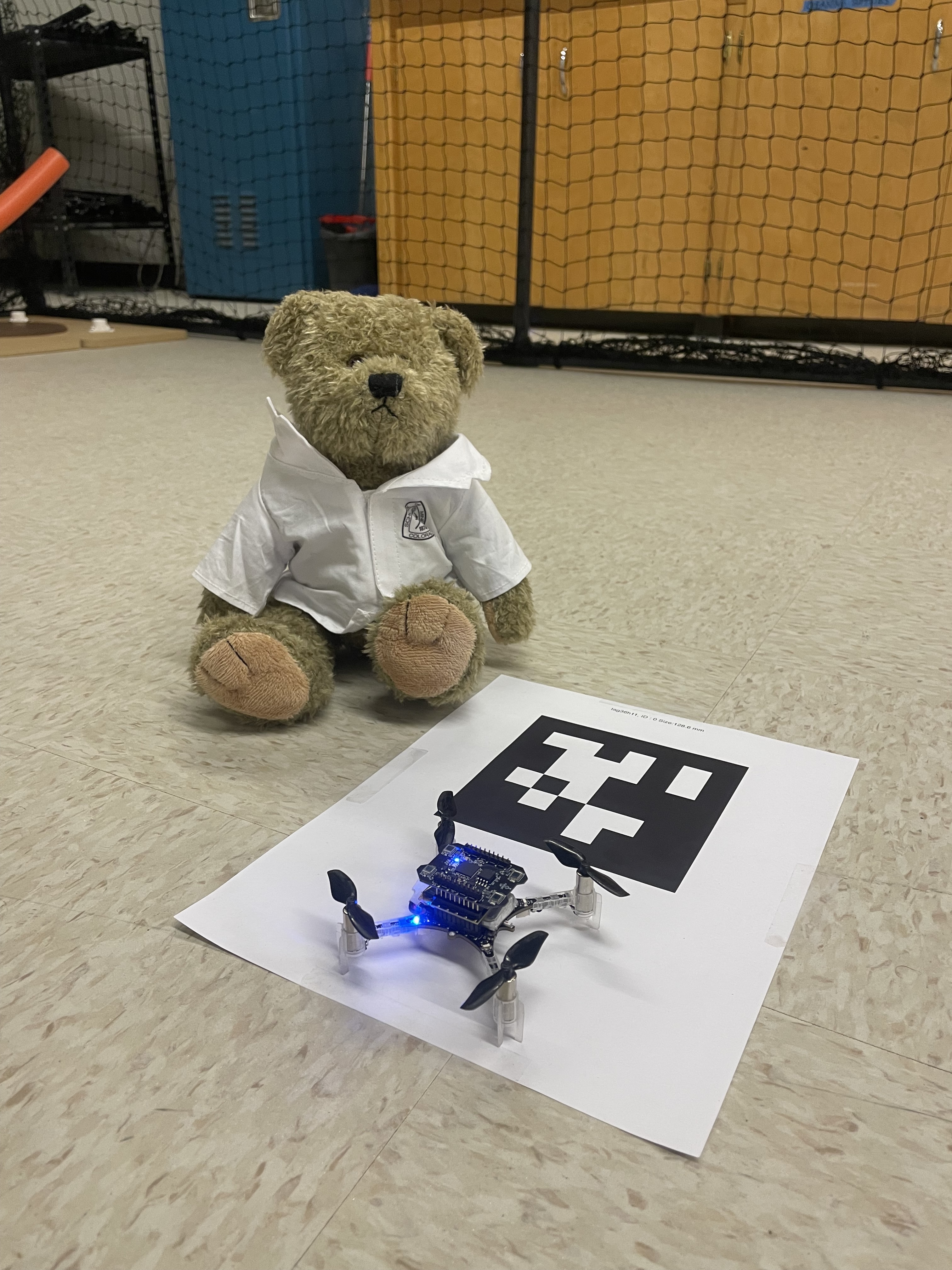

This project aimed to build a drone-based system for aerial videography that can be instructed purely with natural language. In particular, the project focuses on semi-autonomously filming moving objects in a sports videography context. The system is based on lightweight Crazyflie drones and an external RealSense depth camera, both of which are well suited to indoor operation. We use a pre-trained vision model and a Large Language Model (LLM) to model object states and guide the drones in the filming task. An off-board computation setup enables real-time object detection and the translation of natural language inputs into machine-level instructions for drone navigation. The system includes a web-based interface for user interaction and can also run in simulation. Its capabilities have been evaluated through laboratory experiments in a simplified environment.
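The overview above does not specify the command format the LLM produces or how a command becomes a navigation target. The sketch below is illustrative only. It assumes the LLM is prompted to emit a small JSON command, where the `action`, `target`, `distance`, and `height` fields are hypothetical. It also assumes the vision pipeline supplies a tracked object's position and velocity in a world frame. A `follow` command is then turned into a position setpoint that keeps the drone a fixed distance behind the moving object, facing it, and clamped to a hypothetical indoor flight area. `ObjectState`, `Setpoint`, `parse_command`, and `follow_setpoint` are invented names, not part of the project's code or the Crazyflie API.

```python
import json
import math
from dataclasses import dataclass


@dataclass
class ObjectState:
    # Hypothetical tracked-object state in a world frame (metres, m/s),
    # e.g. estimated from depth-camera detections.
    x: float
    y: float
    z: float
    vx: float
    vy: float


@dataclass
class Setpoint:
    x: float
    y: float
    z: float
    yaw: float  # radians; heading that points the drone at the object


def parse_command(llm_output: str) -> dict:
    """Parse a hypothetical JSON command from the LLM and fill in defaults."""
    cmd = json.loads(llm_output)
    action = cmd.get("action", "hover")
    if action not in ("follow", "hover"):
        raise ValueError(f"unsupported action: {action}")
    return {
        "action": action,
        "target": cmd.get("target"),  # label used to pick which detection to film
        "distance": float(cmd.get("distance", 1.0)),
        "height": float(cmd.get("height", 1.0)),
    }


def follow_setpoint(obj: ObjectState, distance: float, height: float,
                    bounds: tuple = (-2.0, 2.0)) -> Setpoint:
    """Place the drone `distance` metres behind the object along its motion."""
    speed = math.hypot(obj.vx, obj.vy)
    if speed > 1e-3:
        dx, dy = obj.vx / speed, obj.vy / speed
    else:
        # Object roughly stationary: assume a default viewpoint on the -x side.
        dx, dy = 1.0, 0.0
    lo, hi = bounds  # hypothetical square flight area (metres)
    x = min(max(obj.x - distance * dx, lo), hi)
    y = min(max(obj.y - distance * dy, lo), hi)
    # Face the object from the (possibly clamped) drone position.
    yaw = math.atan2(obj.y - y, obj.x - x)
    return Setpoint(x, y, height, yaw)


if __name__ == "__main__":
    cmd = parse_command(
        '{"action": "follow", "target": "ball", "distance": 1.0, "height": 0.8}'
    )
    ball = ObjectState(x=0.5, y=0.0, z=0.1, vx=0.4, vy=0.0)
    print(follow_setpoint(ball, cmd["distance"], cmd["height"]))
    # prints Setpoint(x=-0.5, y=0.0, z=0.8, yaw=0.0)
```

Keeping the LLM's role to emitting a constrained, validated command, and leaving geometry to deterministic code, is one way to make natural-language instructions safe to execute on a real drone. The actual system may divide this work differently.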

People

Dr Micah Corah

Interests: Aerial robotics, active perception, and multi-robot systems, with applications to aerial videography